Securing AI Agents: Why Trust Breaks Before Models Do

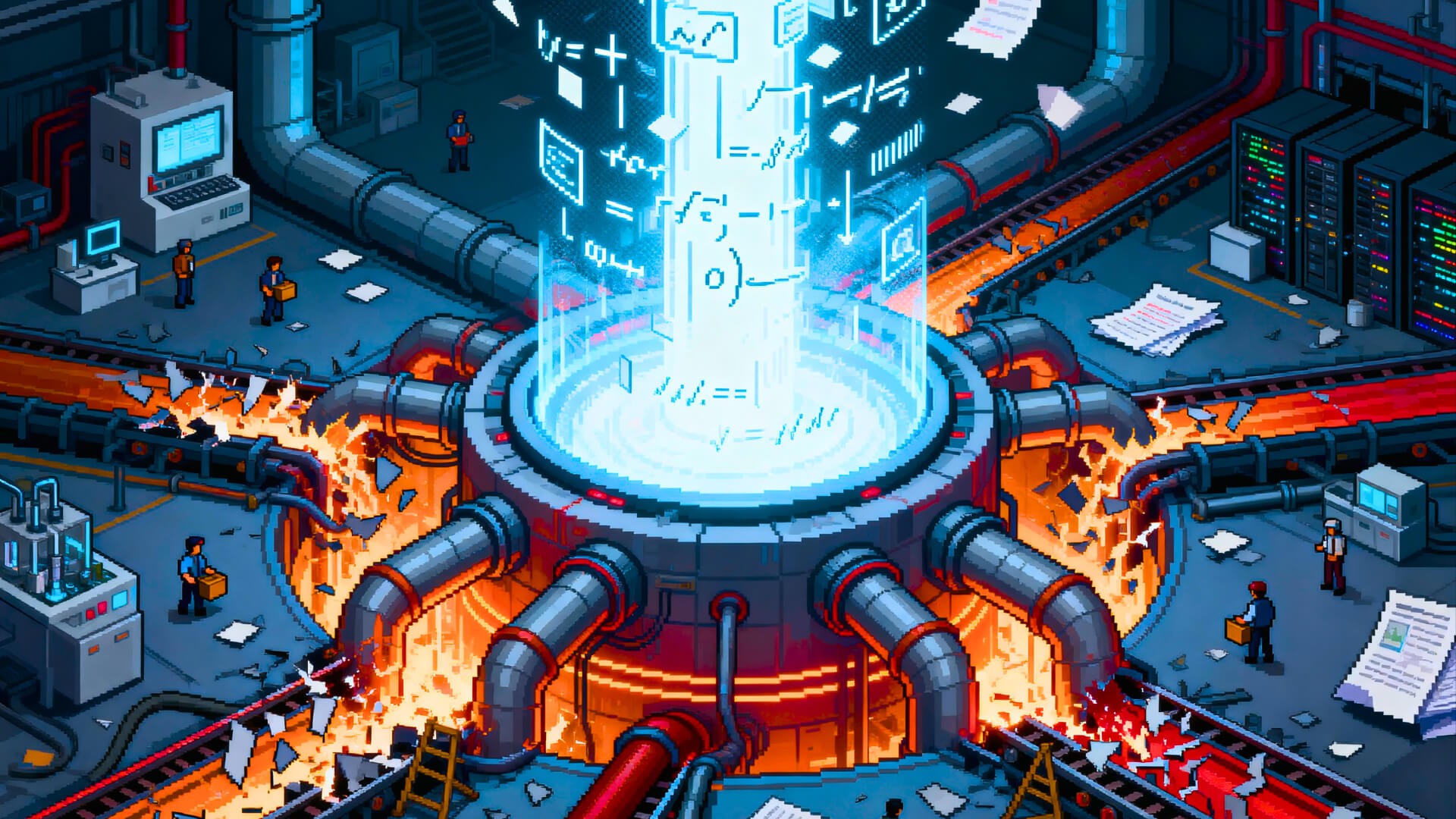

Most teams still treat AI agents like glorified scripts—stateless helpers that execute commands and disappear. That framing collapses the moment an agent can plan, act, and persist state across sessions.

The security conversation has been stuck on model safety: alignment, hallucinations, jailbreaks. Important, sure. But the real exposure isn't intelligence. It's agency. The moment an agent can decide which API to call next—not just call one—you've crossed from tool into actor. And actors require a fundamentally different security posture.

Security problems don't start with intelligence. They start with agency.

What Actually Makes Agents Dangerous (and Useful)

Agents aren't risky because they're smart. They're risky because they operate across systems, chain actions together, and persist beyond a single request. That's also what makes them valuable.

The critical distinction: there's a canyon between calling an API and deciding which API to call next. The former is automation. The latter is autonomy. And autonomy amplifies everything—including mistakes.

OWASP's newly released Top 10 for Agentic Applications names "Cascading Failures" as a top-tier risk: a minor error in one component triggers a chain reaction across the system, with the agent's planner executing increasingly destructive actions in an attempt to recover. One bad decision doesn't stay contained. It propagates.
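One way to keep a planner's recovery attempts from compounding is to put a hard cap on them. The sketch below is a minimal illustration under that assumption; `RecoveryBudget` and `run_with_budget` are hypothetical names, not part of the OWASP guidance or any agent framework.

```python
from dataclasses import dataclass


class RecoveryBudgetExceeded(RuntimeError):
    """Raised when a task has used up its allowed recovery attempts."""


@dataclass
class RecoveryBudget:
    # Hypothetical limit on corrective actions per task.
    max_attempts: int = 3
    used: int = 0

    def spend(self, action: str) -> None:
        # Refuse further recovery once the budget is gone, so one error
        # cannot fan out into an open-ended chain of "fixes".
        if self.used >= self.max_attempts:
            raise RecoveryBudgetExceeded(
                f"refusing {action!r}: {self.max_attempts} attempts used, "
                "escalate to a human"
            )
        self.used += 1


def run_with_budget(actions, budget):
    """Try (name, callable) recovery actions in order; return the first that succeeds."""
    for name, action in actions:
        budget.spend(name)
        if action():
            return name
    return None
```

The point of the design is that the stopping condition lives outside the planner: the agent can propose as many fixes as it likes, but the budget decides when the chain ends.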

This extends to the supply chain. Agents inherit the trustworthiness of every tool, plugin, and API they touch. A "secure" agent can still fail catastrophically through an unsafe dependency. Recent research documented 43 different agent framework components with embedded vulnerabilities introduced via supply chain compromise, and many developers are still running outdated versions, unaware of the risk.

Agents don't act alone. Their supply chain is part of the attack surface.
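A common mitigation is to pin each tool to the exact artifact that was reviewed and refuse anything else. Here is a rough sketch of that idea using only the standard library; the `PINNED_TOOLS` table and the tool name in it are made up for illustration.

```python
import hashlib
import hmac

# Hypothetical allowlist: tool name -> SHA-256 of the artifact that was reviewed.
PINNED_TOOLS = {
    "search_plugin": hashlib.sha256(b"reviewed plugin bytes v1.2.0").hexdigest(),
}


def verify_tool(name: str, artifact: bytes) -> bool:
    """Return True only if the artifact matches the digest pinned for this tool."""
    expected = PINNED_TOOLS.get(name)
    if expected is None:
        return False  # unknown tools are rejected by default
    actual = hashlib.sha256(artifact).hexdigest()
    # Constant-time comparison of the two hex digests.
    return hmac.compare_digest(actual, expected)
```

An agent runtime would call something like `verify_tool` before loading a plugin, so a silently updated dependency fails closed instead of inheriting the agent's trust.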

Identity: Every Agent Needs One

Here's a pattern that keeps showing up in production incidents: agents operating under shared service accounts with no individual identity. When something goes wrong, teams can't attribute the action. Can't audit the decision chain. Can't revoke access without breaking everything else.

If you can't name an agent, you can't secure it.

McKinsey's analysis frames it precisely: think of AI agents as "digital insiders", entities operating within systems with varying levels of privilege and authority. Like their human counterparts, these digital insiders can cause harm unintentionally through poor alignment, or deliberately if compromised. Already, 80 percent of organisations report encountering risky behaviours from AI agents, including improper data exposure and unauthorised system access.

Identity isn't about control for control's sake. It's about accountability over time—the ability to trace what happened, understand why, and prevent it from happening again.
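To make the contrast with shared service accounts concrete, here is a small sketch in which every agent gets its own identifier and every action is attributed to it. `AgentIdentity` and `AuditLog` are illustrative names, and the in-memory list stands in for whatever audit store a real system would use.

```python
import uuid
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AgentIdentity:
    """A distinct identity per agent, instead of a shared service account."""
    name: str
    owner: str  # team accountable for this agent
    agent_id: str = field(default_factory=lambda: str(uuid.uuid4()))


class AuditLog:
    def __init__(self):
        self._entries = []
        self._revoked = set()

    def revoke(self, agent: AgentIdentity) -> None:
        # Revocation targets one agent_id; other agents are unaffected.
        self._revoked.add(agent.agent_id)

    def record(self, agent: AgentIdentity, action: str, target: str) -> None:
        if agent.agent_id in self._revoked:
            raise PermissionError(f"agent {agent.name!r} has been revoked")
        self._entries.append({
            "agent_id": agent.agent_id,
            "owner": agent.owner,
            "action": action,
            "target": target,
            "at": datetime.now(timezone.utc).isoformat(),
        })

    def actions_by(self, agent: AgentIdentity) -> list:
        # Attribution: everything a single agent did, in order.
        return [e for e in self._entries if e["agent_id"] == agent.agent_id]
```

With this shape, revoking one misbehaving agent does not touch the others, and the question "which agent did this?" has an answer.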

Permissions: Least Privilege or Latent Breach

Broad permissions feel convenient until they fail catastrophically. And with agents, they fail faster than with humans—because agents execute at machine speed without the hesitation that might make a human pause before doing something questionable.

The OWASP framework identifies "Identity & Privilege Abuse" as a top-three agentic risk. The pattern: attackers exploit inherited credentials, delegated permissions, or agent-to-agent trust to escalate access. Three of the top four risks in their taxonomy are identity-focused.

The fix requires moving beyond static role-based access control. Agents need scoped capabilities: task-bounded access with time-limited credentials. The question isn't just "can the agent access this?" but "should the agent ever be the one deciding this?"
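A scoped capability can be sketched as a small value object that ties a set of actions to one task and one expiry time. This is an assumption about how such a grant might look, not any vendor's API; `Capability`, `grant`, and the action strings are hypothetical.

```python
import time
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Capability:
    agent_id: str
    task_id: str
    allowed_actions: frozenset
    expires_at: float  # seconds on the time.monotonic() clock

    def permits(self, action: str, task_id: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        # All three must hold: right task, listed action, not yet expired.
        return (
            task_id == self.task_id
            and action in self.allowed_actions
            and now < self.expires_at
        )


def grant(agent_id, task_id, actions, ttl_seconds, now=None):
    """Issue a capability that expires ttl_seconds from now."""
    now = time.monotonic() if now is None else now
    return Capability(agent_id, task_id, frozenset(actions), now + ttl_seconds)
```

Unlike a static role, this grant answers "for which task?" and "until when?" as well as "which actions?", so a leaked or forgotten credential stops working on its own.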

Some paths should remain untouchable regardless of technical permissions: revenue flows, compliance logic, user trust boundaries. These are business invariants, not access control lists.
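In code, the difference between an invariant and an access control list is ordering: the invariant is checked first and no grant can override it. A minimal sketch, with made-up resource prefixes standing in for revenue, compliance, and trust boundaries:

```python
# Hypothetical resource prefixes that no agent may modify, whatever its grants say.
PROTECTED_PREFIXES = ("billing/", "compliance/", "auth/")


def is_allowed(resource: str, action: str, granted: set) -> bool:
    """Check business invariants first, then ordinary permissions."""
    if action != "read" and resource.startswith(PROTECTED_PREFIXES):
        return False  # invariant: protected paths are never agent-writable
    return (resource, action) in granted
```

Even if a misconfigured grant includes `("billing/invoices", "write")`, the invariant check rejects it before the grant is consulted.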

Memory Is an Attack Surface

Persistent memory isn't harmless context. It's a vector.

Palo Alto's Unit 42 demonstrated that indirect prompt injection can poison an agent's long-term memory, allowing injected instructions to persist across sessions and potentially exfiltrate conversation history. Because memory contents get injected into system instructions, they're often prioritised over user input—amplifying the impact.

Princeton researchers went further, showing that malicious "memories" injected into an agent's stored context can trigger unauthorised asset transfers in financial systems. The attack required no complex tools—only careful prompting and access to stored memory. Unlike prompt injection, which is immediate, a poisoned memory persists indefinitely, influencing behaviour across sessions until discovered.

Memory must be scoped, inspectable, and erasable. An agent that remembers everything is an agent you can't fully trust.
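Those three properties map fairly directly onto an interface. The sketch below is one possible shape, assuming a simple in-memory store; `ScopedMemory` and its method names are illustrative and not taken from the research cited above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MemoryEntry:
    scope: str    # the task or user this memory belongs to
    source: str   # where the content came from, kept for later review
    content: str


class ScopedMemory:
    def __init__(self):
        self._entries = []

    def remember(self, scope: str, source: str, content: str) -> None:
        self._entries.append(MemoryEntry(scope, source, content))

    def recall(self, scope: str) -> list:
        # Scoped: only content from the requested scope is returned.
        return [e.content for e in self._entries if e.scope == scope]

    def inspect(self, scope: str) -> list:
        # Inspectable: full entries, including provenance.
        return [e for e in self._entries if e.scope == scope]

    def erase(self, scope: str, source=None) -> int:
        # Erasable: drop a whole scope, or only entries from one suspect source.
        before = len(self._entries)
        self._entries = [
            e for e in self._entries
            if not (e.scope == scope and (source is None or e.source == source))
        ]
        return before - len(self._entries)
```

Recording the source alongside each entry is what makes cleanup practical: if a web page turns out to have injected instructions, everything that came from it can be erased without wiping the rest.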

There's another dimension here: temporal drift. Even without model changes, tools evolve, permissions shift, and context changes. Yesterday's safe memory becomes today's liability. Securing agents isn't a one-time setup—it's a continuous alignment problem.

Execution Boundaries: Where Agents Must Stop

Certain actions should require human confirmation regardless of how confident the agent is. Deploying directly to production. Modifying core business invariants. Escalating privileges autonomously. These aren't edge cases—they're the difference between controlled automation and uncontrolled liability.

BCG's framework calls this "guarded autonomy": humans stay in the loop by design, not by accident. The recommendation is blunt: always include a kill switch. A healthcare provider should be able to halt an AI scheduling agent instantly if it starts double-booking critical equipment.
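Both ideas, mandatory confirmation and a kill switch, can sit in a single gate that every action passes through. The following is a sketch under that assumption; `ExecutionGate`, the action names, and the `approver` callback (a stand-in for a real human review step) are all hypothetical and not part of BCG's framework.

```python
import threading

# Hypothetical actions that always need a human decision.
REQUIRES_APPROVAL = {"deploy_production", "escalate_privileges", "modify_invariant"}


class KillSwitchEngaged(RuntimeError):
    """Raised when the agent has been halted by an operator."""


class ExecutionGate:
    def __init__(self, approver):
        # approver: callable(action, details) -> bool, standing in for a human reviewer.
        self._approver = approver
        self._halted = threading.Event()

    def halt(self) -> None:
        # Kill switch: once set, every later action is refused.
        self._halted.set()

    def execute(self, action: str, details: dict, run):
        if self._halted.is_set():
            raise KillSwitchEngaged(f"agent halted, refusing {action!r}")
        if action in REQUIRES_APPROVAL and not self._approver(action, details):
            raise PermissionError(f"{action!r} was not approved by a human")
        return run()
```

Note that the agent's confidence never appears as an input: whether an action needs approval depends only on what the action is.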

But boundaries aren't just about prevention. They're about recovery. Systems that can't roll back can't survive failure. Production safety isn't about never failing—it's about failing in ways you can reverse.
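One simple way to make failure reversible is to require an undo step for every action the agent takes. The sketch below assumes each action can be paired with a compensating callable, which will not hold for every real-world side effect; `ReversibleRun` is an illustrative name.

```python
class ReversibleRun:
    """Collects an undo step for every completed action."""

    def __init__(self):
        self._undo_stack = []

    def do(self, action, undo):
        result = action()
        # Register the undo only after the action has succeeded.
        self._undo_stack.append(undo)
        return result

    def rollback(self) -> None:
        # Undo in reverse order, most recent action first.
        while self._undo_stack:
            self._undo_stack.pop()()
```

For example, with a plain dict standing in for system state:

```python
state = {"replicas": 1}
run = ReversibleRun()
run.do(lambda: state.update(replicas=3), lambda: state.update(replicas=1))
run.rollback()  # state["replicas"] is 1 again
```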

Observability: Security's Quiet Backbone

If you can't observe agent behaviour, you can't secure it. Full stop.

What matters isn't just logging that something happened. It's capturing the decision trace: what the agent saw, what it considered, what it chose, and why. The difference between "it worked" and "we know why it worked" is the difference between luck and engineering.
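A decision trace can be as simple as a structured record with one field per question. This sketch assumes JSON lines as the log format; the `DecisionTrace` class and its field names are illustrative rather than a standard schema.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionTrace:
    agent_id: str
    observed: dict    # what the agent saw
    considered: list  # the options it weighed
    chosen: str       # what it chose
    rationale: str    # why, as stated by the agent
    at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        # One JSON object per decision, suitable for an append-only log.
        return json.dumps(asdict(self), sort_keys=True)
```

A plain "action succeeded" log line would keep only `chosen`; the other fields are what let someone later reconstruct why that choice was made.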

IBM's guidance is direct: the absence of agent monitoring is now one of the biggest technical and governance risks facing enterprise AI. Without observability, organisations lose the ability to explain outcomes or detect anomalies before they scale. A subtle prompt change can trigger a different decision tree. A token-level hallucination can propagate through a workflow and surface as a compliance breach.

There's a social dimension too. Engineers disable tools they can't reason about. Opacity kills adoption faster than bugs. An agent that violates team norms will be shut off before it ever violates policy.

Why Trust Collapses Before Capability

Most agent failures aren't model failures. They're boundary failures, permission failures, accountability failures.

The seductive notion of "read-only agents" as inherently safe misses the point. Influence is still action. Bad suggestions still cause damage. A compromised agent that can only recommend can still manipulate decisions at scale.

Trust breaks when systems act without explainability—not when they act incorrectly. Incorrect actions from transparent systems can be debugged and fixed. Opaque systems that happen to produce correct outputs are ticking time bombs.

Security as Enabler, Not Brake

Security isn't what slows agents down. Unclear boundaries do.

Agents with well-defined permissions, observable behaviour, and clear escalation paths move faster than agents operating in ambiguity. They break less. They get adopted. Teams trust them enough to delegate meaningful work.

The reframe: security is what makes scale possible. Without it, every deployment is a contained experiment that can't grow. With it, automation compounds.

What Securing Agents Unlocks

Get this right and you unlock what agents actually promise: safe multi-step automation, agentic workflows that span systems, and AI that can act without causing silent damage.

The organisations building this infrastructure now aren't just reducing risk. They're building the foundation for everything that comes next.

Power without boundaries isn't intelligence. It's liability.

Ardor is built for teams who understand that agentic infrastructure isn't optional—it's the difference between controlled automation and uncontrolled liability. Identity, permissions, observability, and execution boundaries aren't afterthoughts in our platform. They're foundational.

If you're ready to build agents that can actually ship to production, start here.