The “95% AI Failure” Stat Is Real. The Interpretation Isn’t.

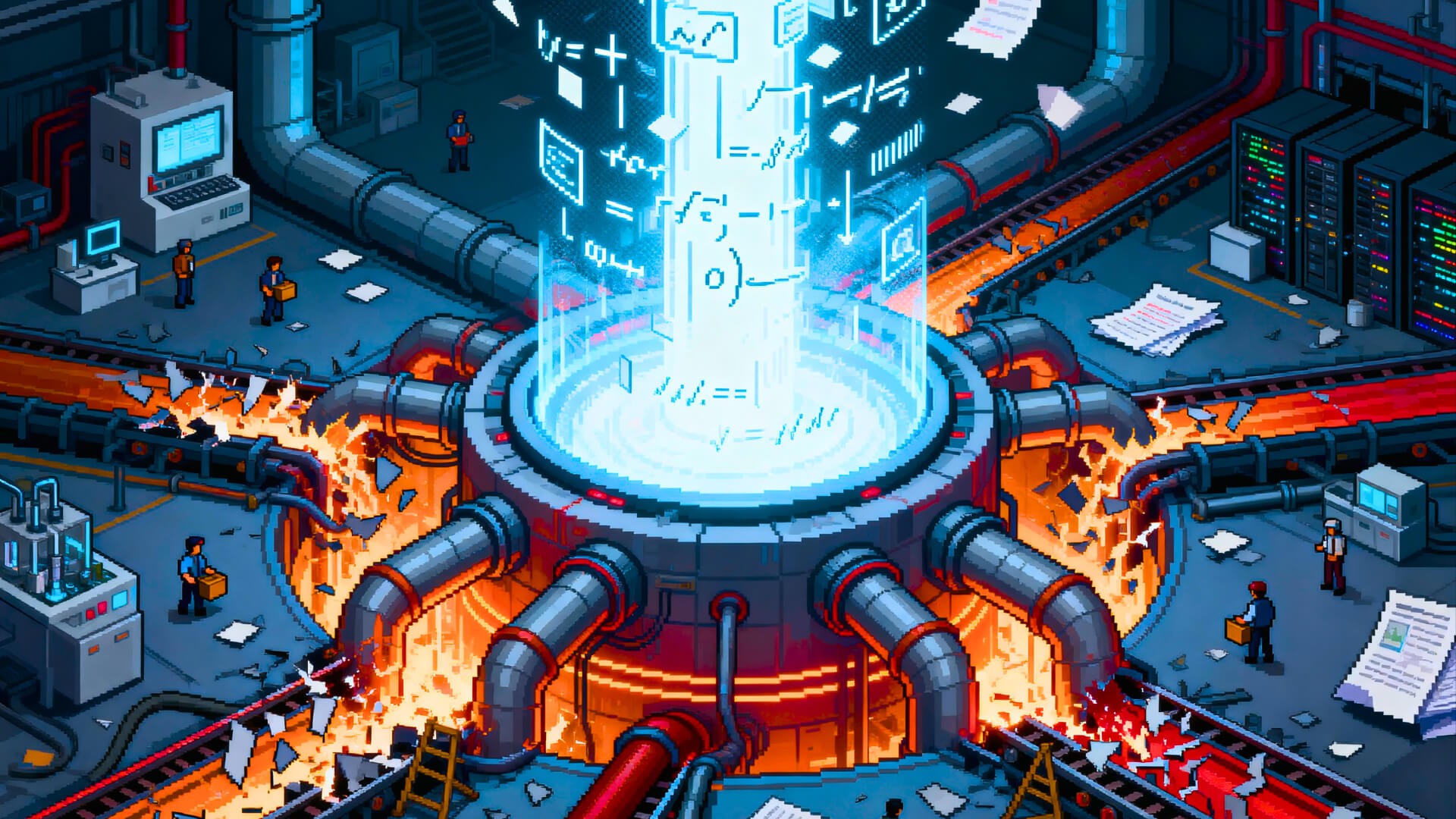

The “95% AI failure” stat is real. The conclusion isn’t. AI isn’t failing in enterprises—enterprise software is. When people bypass procurement to get real work done, that’s not chaos. It’s signal.

Every few months, a headline cycles through the enterprise tech press: "95% of AI pilots fail." It gets quoted in boardrooms, cited in procurement memos, used to justify caution. The number sounds authoritative. It comes from MIT research. And it's technically accurate.

The problem is what people think it means.

Reality doesn't match the narrative

If AI were failing at scale, you wouldn't see kernel developers using Cursor daily. You wouldn't see junior engineers shipping features faster than their seniors did five years ago. You wouldn't see design teams using Claude to prototype interactions before touching Figma.

The behavior doesn't match the claim. When something genuinely doesn't work, adoption stalls. People stop using it. AI usage is accelerating, not collapsing. That disconnect matters.

How nuance dies in translation

Here's the pattern: a researcher publishes findings with caveats. A headline writer extracts the most dramatic number. An executive reads the headline. The context vanishes.

This isn't malice. It's incentive-driven simplification. News sites optimize for clicks. Readers optimize for speed. Nuance dies in the middle. The result is a stat that's technically true but functionally misleading.

What the MIT report actually says

The Project NANDA research from the MIT Media Lab breaks down like this:

Around 83% of generic LLM pilots—tools like ChatGPT Enterprise or GitHub Copilot—reach production. The oft-cited 95% ‘failure’ rate applies specifically to custom-built enterprise AI tools: bespoke models trained on proprietary data with specialized deployment requirements.

Meanwhile, employees at roughly 90% of the surveyed companies report using personal AI tools for work, while only about 40% of companies have purchased enterprise AI subscriptions. The report uses the term ‘shadow AI economy’ to describe this gap between official procurement and actual usage.

One clarification: reaching production doesn't guarantee ROI. But it does disprove the narrative that AI pilots fail because the technology doesn't work or because nobody wants to use it.

Context and limitations

The Project NANDA findings are based on 52 interviews with enterprise decision-makers. The research team has clear incentives: they're positioning themselves as consultants who can help companies avoid pilot failure. That doesn't invalidate the findings, but it does explain the framing.

The sample size is small. The methodology relies on self-reporting. These aren't fatal flaws, but they're worth noting before treating the numbers as universal truth.

Shadow AI as signal, not failure

The "shadow AI economy" isn't evidence of recklessness. It's not a governance collapse. It's unmet demand.

When employees route around procurement to use tools that make them faster, that's not defiance. That's signal. People don't adopt tools that don't work. They abandon tools that waste their time. The fact that individuals are willing to pay for AI subscriptions with personal credit cards says more about utility than any pilot success metric.

Shadow AI isn't the problem. It's the canary in the coal mine.

The real failure mode

AI capability works. Adoption happens naturally when people control their own tooling. The breakdown occurs when enterprise software enters the picture.

Enterprise software, by definition, is bought top-down. It's optimized for compliance dashboards and vendor management processes. It's built for procurement teams, not practitioners. When AI gets forced through that system, it dies the same death as every other tool that arrived with a 47-page integration guide.

The failure isn't technical. It's organizational. Companies are trying to deploy AI using the same procurement and governance frameworks that turned many enterprise platforms into tools users tolerate rather than choose.

Why the misinterpretation matters

When executives believe "95% of AI fails," they add more oversight. More approval layers. More pilots stuck in staging environments waiting for compliance sign-off. The misread narrative creates the exact conditions that cause pilots to fail.

This becomes a self-fulfilling loop. Companies see the headline, tighten controls, watch pilots stall, then point to the stat as proof they were right to be cautious. Meanwhile, their employees are already using Claude and Cursor and Gemini because those tools don't require a procurement committee.

Name the problem precisely

AI pilots don't fail because the technology is immature. They fail because enterprises treat AI like enterprise software: something to be managed, governed, and controlled through layers of process designed for a different era.

The 95% stat is real. But it's measuring enterprise software dysfunction, not AI capability. Until companies recognize that distinction, they'll keep building pilots that die in staging while their employees build the future in shadow.

The failure isn't AI. It's enterprise software insisting it still controls how work gets done.

Sign up for Ardor if you want to build AI products that actually make it out of staging.