Beyond the Context Window: Building State Management for the Agentic SDLC

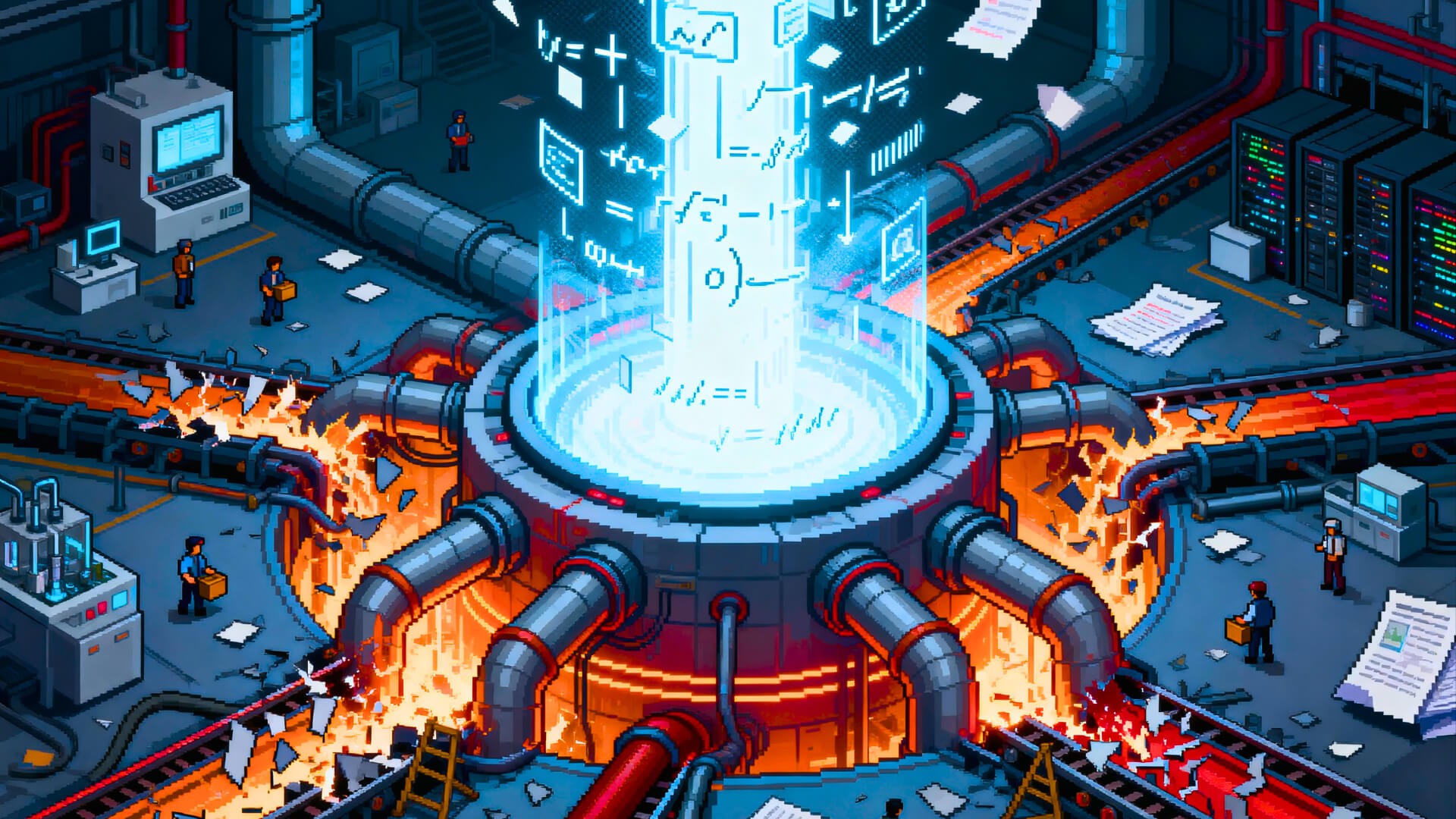

Bigger context windows didn’t fix AI in production. They exposed the real problem. The teams shipping agentic systems at scale aren’t stuffing millions of tokens into prompts—they’re building structured, persistent state across the entire SDLC. Context isn’t memory. It’s infrastructure.

The context window arms race is over. The real competition just started.

A year ago, 128,000 tokens was cutting-edge. Today, Claude offers 1 million. Gemini matches it. Llama 4 Scout pushes to 10 million. Magic.dev claims 100 million. The “stuff it all in” approach to AI finally became technically possible.

And yet, the enterprises actually shipping AI at scale aren’t just stuffing everything into massive context windows. They’re building something more sophisticated: persistent, structured context management that treats context not as memory, but as state.

The difference matters more than the token count ever did.

The Brute Force Trap

The intuition is seductive: bigger context window means better AI. Upload your entire codebase. Feed in all your documentation. Let the model figure it out.

Research tells a different story. Chroma’s study of 18 LLMs found that models don’t use their context uniformly—performance grows “increasingly unreliable as input length grows.” The “lost in the middle” phenomenon is real: models excel at retrieving information from the beginning and end of the context window, but struggle with content buried in the middle.

Databricks’ analysis confirms: “there are still limits to the capabilities of many long context models: many models show reduced performance at long context as evidenced by failing to follow instructions or producing repetitious outputs.”

Mechanically stuffing text into a context window is a brute-force strategy. It scatters attention, degrades answer quality, and, critically, costs a fortune. Computational overhead for processing long context grows non-linearly: in a standard transformer, self-attention compute scales roughly with the square of the sequence length. Processing 200k+ tokens per request can cost $20 per call.
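To make the cost argument concrete, here is a rough back-of-the-envelope sketch. The price and the step count are hypothetical placeholders, not quoted rates, and the quadratic term assumes plain dense attention; real providers apply caching and other optimizations that change the numbers.

```python
def call_cost(input_tokens: int, usd_per_million: float) -> float:
    """Input-side cost of one request at a flat per-token price."""
    return input_tokens / 1_000_000 * usd_per_million


def attention_ratio(long_tokens: int, short_tokens: int) -> float:
    """Relative self-attention work, assuming quadratic scaling in length."""
    return (long_tokens / short_tokens) ** 2


# Hypothetical scenario: an agent run that resends 200k tokens on every step.
PRICE_PER_MILLION = 3.00  # assumed USD per million input tokens
STEPS = 40                # assumed number of model calls in one agent run

per_call = call_cost(200_000, PRICE_PER_MILLION)
per_run = per_call * STEPS
relative_work = attention_ratio(200_000, 20_000)

print(f"per call: ${per_call:.2f}, per run: ${per_run:.2f}")
print(f"attention work vs a 20k-token prompt: {relative_work:.0f}x")
```

The point of the sketch is the shape, not the figures: the bill multiplies with every step of an agentic workflow, while the attention work grows much faster than the token count.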

Raw context isn’t intelligence. It’s expensive noise.

RAG Isn’t Dead. It’s Evolving.

The “RAG vs long context” debate missed the point entirely.

For simple retrieval tasks with relatively static information, long context windows work fine. But enterprise AI doesn’t operate on simple retrieval. It operates on complex, multi-step workflows where context requirements shift dynamically.

The 2025 consensus is clear: “Improved long-context capabilities have not signalled RAG’s demise.” The practical question isn’t “RAG or long context?” It’s “how do you incorporate the most relevant and effective information into the model’s context with the best cost-performance ratio?”

That’s an infrastructure problem, not a model problem.

The winners aren’t choosing between RAG and long context. They’re building hybrid architectures that use both strategically—retrieval for precision, long context for synthesis, and structured orchestration to manage which approach applies when.
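A minimal sketch of what that orchestration decision could look like. Everything here is illustrative: the `Task` fields, the `"lookup"` and `"synthesis"` labels, and the 200,000-token budget are assumptions made for the example, not part of any particular framework.

```python
from dataclasses import dataclass
from enum import Enum


class Strategy(Enum):
    RETRIEVAL = "retrieval"        # fetch a few precise chunks
    LONG_CONTEXT = "long_context"  # load whole documents for synthesis


@dataclass
class Task:
    kind: str           # hypothetical labels: "lookup" or "synthesis"
    corpus_tokens: int  # estimated size of the relevant material


def choose_strategy(task: Task, window_budget: int = 200_000) -> Strategy:
    """Pick a context strategy for one step of a workflow."""
    # Material that cannot fit in the window has to be retrieved selectively.
    if task.corpus_tokens > window_budget:
        return Strategy.RETRIEVAL
    # Synthesis needs the material side by side, so load it whole.
    if task.kind == "synthesis":
        return Strategy.LONG_CONTEXT
    # Default to the cheaper, more precise option.
    return Strategy.RETRIEVAL


print(choose_strategy(Task("lookup", 5_000)))
print(choose_strategy(Task("synthesis", 50_000)))
print(choose_strategy(Task("synthesis", 5_000_000)))
```

A production router would weigh more signals (freshness, latency, cost ceilings), but even this toy version shows the decision being made per step rather than once per system.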

Context Isn’t Memory. It’s State.

Context engineering isn’t prompt engineering with more tokens. It’s state management for AI systems.

Here’s the insight that changes everything: for agentic AI systems, context isn’t about remembering conversations. It’s about maintaining state across complex, multi-step workflows.

Think about what an AI agent actually needs to do when building software:

Remember the requirements established three steps ago

Track which architectural decisions have been made

Know what code has already been generated

Understand what tests have passed or failed

Maintain awareness of deployment constraints

This isn’t “memory” in the chatbot sense. It’s state: durable, structured, inspectable state that persists across the entire software development lifecycle.
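One way to picture the list above as state rather than chat history is a plain, serializable record. This is a sketch under stated assumptions: the `AgentState` class and its field names are hypothetical, chosen to mirror the five needs listed, and JSON stands in for whatever durable store a real system would use.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class AgentState:
    requirements: list[str] = field(default_factory=list)
    decisions: dict[str, str] = field(default_factory=dict)      # topic -> choice
    generated_files: list[str] = field(default_factory=list)
    test_results: dict[str, bool] = field(default_factory=dict)  # test -> passed
    deploy_constraints: list[str] = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize to a human-readable form that can be stored and diffed."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)

    @classmethod
    def from_json(cls, raw: str) -> "AgentState":
        """Rebuild the state in a later step or a separate process."""
        return cls(**json.loads(raw))

    def failing_tests(self) -> list[str]:
        return sorted(name for name, ok in self.test_results.items() if not ok)


state = AgentState(requirements=["Support SSO login"])
state.decisions["database"] = "PostgreSQL"
state.generated_files.append("auth/login.py")
state.test_results["test_login"] = False

restored = AgentState.from_json(state.to_json())
print(restored.failing_tests())
```

Because the record round-trips through plain JSON, a later step can reload it, query it, and audit it without replaying any conversation.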

Anthropic’s guidance on context engineering frames this precisely: “Building with language models is becoming less about finding the right words and phrases for your prompts, and more about answering the broader question of ‘what configuration of context is most likely to generate our model’s desired behavior?’”

Why This Is an Infrastructure Problem

The major cloud providers have noticed. AWS launched AgentCore Memory for persistent context management. Microsoft added managed memory to Foundry Agent Service. Google is building similar capabilities.

The message is clear: context management is moving from application logic to infrastructure primitive.

But here’s the catch: these solutions solve the general problem of AI memory. They don’t solve the specific problem of managing context across a full software development lifecycle.

Building software isn’t a conversation. It’s a structured process with defined phases, artifacts, dependencies, and constraints. Requirements gathering, architecture design, code generation, testing, and deployment each need different context.

Generic memory systems can’t model this. You need context infrastructure purpose-built for the SDLC.

What SDLC-Native Context Looks Like

For AI to truly transform software development—not just accelerate pieces of it—context must flow through the entire lifecycle:

Requirements context informs architecture. What are the constraints? What are the non-negotiables? What’s the actual problem being solved?

Architecture context informs code generation. What patterns are we using? What are the service boundaries? What are the integration points?

Code context informs testing. What are the critical paths? What are the edge cases? What assumptions are baked into the implementation?

Testing context informs deployment. What’s been validated? What’s still risky? What monitoring needs to be in place?

Deployment context informs operations. What’s the rollback plan? What are the SLAs? What does healthy look like?

Each phase produces artifacts that become context for the next phase. Lose that context, and you’re back to stateless AI—generating code that doesn’t fit the architecture, writing tests that miss the critical paths, deploying without understanding the dependencies.
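The handoff between phases can be sketched in a few lines. This is an illustration, not a description of any product: `LifecycleContext` and its methods are hypothetical names, and returning every upstream phase (rather than only the immediately preceding one) is a design choice made for the example.

```python
from dataclasses import dataclass, field

PHASES = ["requirements", "architecture", "code", "testing", "deployment", "operations"]


@dataclass
class LifecycleContext:
    artifacts: dict[str, list[str]] = field(default_factory=dict)

    def record(self, phase: str, artifact: str) -> None:
        """Store an artifact produced during the given phase."""
        if phase not in PHASES:
            raise ValueError(f"unknown phase: {phase}")
        self.artifacts.setdefault(phase, []).append(artifact)

    def context_for(self, phase: str) -> dict[str, list[str]]:
        """Return artifacts from every phase upstream of `phase`, in order."""
        upstream = PHASES[: PHASES.index(phase)]
        return {p: self.artifacts[p] for p in upstream if p in self.artifacts}


ctx = LifecycleContext()
ctx.record("requirements", "Must handle 1k requests/sec")
ctx.record("architecture", "Stateless API behind a load balancer")
ctx.record("code", "api/server.py")

print(ctx.context_for("code"))
print(ctx.context_for("testing"))
```

Here the code phase sees requirements and architecture, and testing additionally sees what code was produced. Drop the store and each phase starts from an empty dictionary, which is the stateless failure mode described above.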

The platforms that win will be the ones that manage this context natively—not as an afterthought bolted onto code generation, but as the foundational infrastructure that makes coherent, end-to-end AI development possible.

The Competitive Implications

Context is becoming the new moat.

When every company has access to the same foundation models, the differentiator isn’t the model—it’s the context you can feed it. Your proprietary requirements, your architectural decisions, your codebase patterns, your operational knowledge.

Companies that build robust context infrastructure will compound their advantage over time. Each project generates context that makes the next project better. Each deployment adds operational knowledge. Each bug fix refines understanding.

Companies that treat AI as stateless tooling will reset to zero every time. No learning. No compounding. No moat.

The question isn’t “which AI model should we use?” It’s “what context infrastructure are we building, and who controls it?”

The Bottom Line

Million-token context windows are table stakes. What matters is what you do with them.

Stuffing everything in is expensive and unreliable. RAG alone is insufficient for complex workflows. The answer is structured context management—purpose-built for the workflows that matter to your business.

For software development, that means context infrastructure designed for the SDLC: requirements flowing to architecture flowing to code flowing to tests flowing to deployment, with state persisted and managed at every step.

Context isn’t just memory. It’s the operating system for AI-native development.

That’s what we’re building.

Ready to see context management designed for the full software development lifecycle?

Sign up to see how Ardor maintains structured context from first prompt to production deployment.