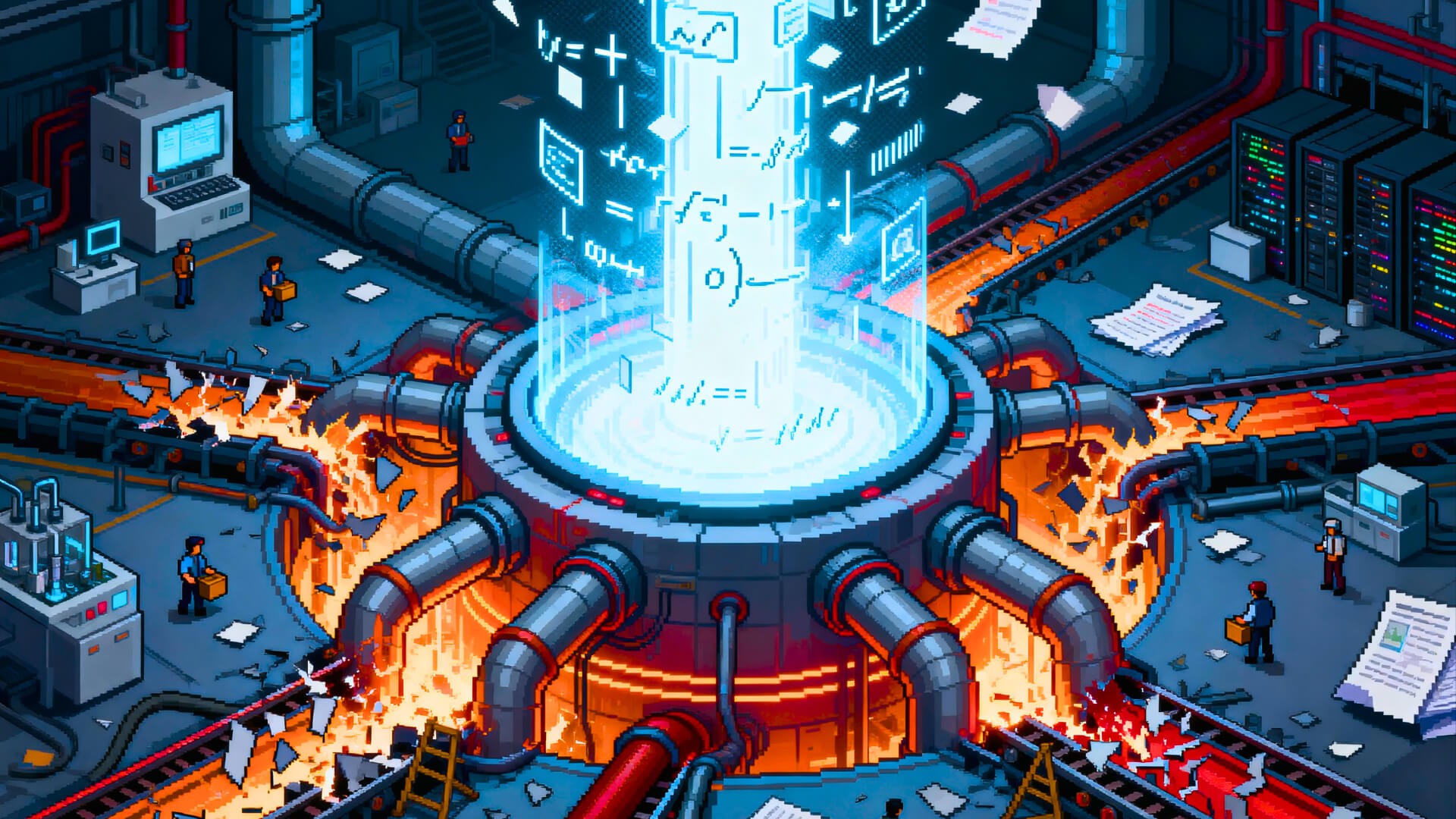

The Real Bottleneck in AI-Powered Research Isn’t Intelligence

Intelligence isn’t the bottleneck anymore. Execution is. AI models keep getting smarter, but serious research still feels slow because the hard part isn’t answering questions. It’s orchestrating messy documents, fragile workflows, and expensive compute into something that actually works.

Models are getting smarter. That much is obvious. Every quarter brings a new benchmark topped, another capability unlocked, another set of release notes promising unprecedented reasoning and understanding. The press coverage writes itself.

And yet.

Serious research doesn't feel three times faster. Deep analysis doesn't complete in a tenth of the time. The practitioners doing the actual work—the senior engineers synthesising audit findings, the technical founders triangulating market intelligence, the researchers piecing together multi-source evidence—report something quieter and more frustrating: marginal gains, inconsistent results, and a nagging sense that the bottleneck has simply moved elsewhere.

A recent METR study found that experienced open-source developers estimated AI tools made them 20% faster, but objective measurement showed no speed improvement at all; on the tasks studied, they actually took 19% longer with AI assistance. Faros AI's 2025 research across 10,000 developers revealed a related paradox: developers using AI completed 21% more tasks, but PR review time increased 91%, effectively neutralising the productivity gains. The downstream bottlenecks absorbed the value created by the intelligence itself.

None of these studies is decisive on its own, but taken together they point to a consistent pattern.

Intelligence, it turns out, is now relatively cheap for many document-heavy, analysis-driven research workflows. Friction is not.

So why does serious research still feel slow?

What Real Research Actually Looks Like

Demonstrations are seductive. A chatbot ingesting a white paper and answering questions about it? Impressive. A model summarising a 50-page report in seconds? Genuinely useful for a quick skim.

But that isn't research. That's a parlour trick often mistaken for productivity.

Real research looks messier. It involves a 200-page PDF with scanned appendices and tables that don't render correctly. It means cross-referencing a regulatory filing against three academic papers, two earnings call transcripts, and a technical specification that lives somewhere in a shared drive nobody remembers creating. It requires tracking provenance, understanding context, and maintaining coherence across dozens of dependent steps.

The distinction matters: answering questions is not the same as moving work forward. Research is not a single query with a satisfying response. It's a chain of dependent actions where each step creates context for the next—and where the failure of any single step compromises everything downstream.
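The compounding effect of dependent steps is easy to underestimate. The sketch below is illustrative only: it assumes every step succeeds independently with the same probability, which real pipelines rarely do, and the function name `chain_success_probability` is hypothetical.

```python
# Illustrative sketch: end-to-end reliability of a chain of dependent steps,
# ASSUMING every step succeeds independently with the same probability.


def chain_success_probability(step_success: float, num_steps: int) -> float:
    """Return the probability that all `num_steps` dependent steps succeed."""
    if not 0.0 <= step_success <= 1.0:
        raise ValueError("step_success must be between 0 and 1")
    if num_steps < 0:
        raise ValueError("num_steps must be non-negative")
    # One failure anywhere breaks everything downstream, so the
    # per-step probabilities multiply.
    return step_success ** num_steps


if __name__ == "__main__":
    for p in (0.99, 0.95, 0.90):
        row = ", ".join(
            f"{n} steps: {chain_success_probability(p, n):.1%}" for n in (5, 20, 50)
        )
        print(f"per-step success {p:.0%} -> {row}")
```

Under that assumption, a chain of 20 steps that are each 95% reliable completes end-to-end only about 36% of the time (0.95^20 ≈ 0.358). Real failures are often correlated, so treat the numbers as intuition rather than a forecast.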

Organisations face steep accuracy challenges with lengthy PDFs, and with some tools, "lengthy" can mean anything beyond eight pages. Data extraction accuracy with general-purpose AI drops 30-40% on legacy documents compared to specialised systems. A law firm using general-purpose AI to summarise a 200-page merger agreement missed a buried termination clause, resulting in a $2 million exposure, because the model fragmented the document and failed to connect related provisions across chunks.

This is the gap between demo intelligence and operational intelligence. The former makes for compelling product videos. The latter determines whether work actually gets done.

Where AI Systems Start to Break

When you run serious research through current AI tools, you encounter failure modes that have nothing to do with how smart the model is.

Documents take too long to ingest. Large, complex files, the kind that constitute 90% of professional research, often exceed context windows, which forces chunking strategies that inevitably lose semantic connections. Tables, figures, and multi-column layouts confuse extraction pipelines. PDFs are built for visual presentation, not machine understanding: a naive text extractor hands the model a flat stream of text with no clear boundaries or hierarchy, while charts are embedded as images that text-only extraction simply ignores.
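To see how chunking loses connections, consider the simplest strategy: fixed-size word chunks, optionally overlapping. The sketch below is a generic illustration, not any particular tool's pipeline; `chunk_words` is a hypothetical helper, and the contract text is invented for the example.

```python
# Illustrative sketch: fixed-size word chunking, with and without overlap.
# The contract text below is a HYPOTHETICAL example, not real data.


def chunk_words(text: str, chunk_size: int, overlap: int = 0) -> list[str]:
    """Split `text` into chunks of `chunk_size` words.

    Consecutive chunks share `overlap` words, so any run of at most
    `overlap + 1` consecutive words appears intact in at least one chunk.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


CONTRACT_TEXT = (
    "Section 9 lists closing conditions. Either party may terminate "
    "this agreement if those conditions are unmet."
)
KEY_PHRASE = "terminate this agreement if those conditions"

if __name__ == "__main__":
    for overlap in (0, 6):
        chunks = chunk_words(CONTRACT_TEXT, chunk_size=10, overlap=overlap)
        intact = any(KEY_PHRASE in chunk for chunk in chunks)
        print(f"overlap={overlap}: {len(chunks)} chunks, clause intact: {intact}")
```

Overlap rescues a clause that straddles a chunk boundary, but it does nothing for provisions that reference each other from opposite ends of a long document. Connecting those is an orchestration problem, not a chunk-size setting.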

Agent pipelines are fragile. Gartner reported a 1,445% surge in multi-agent system inquiries from Q1 2024 to Q2 2025, signalling a shift in how systems are being designed. But the operational reality is sobering: 71% of organisations now cite agentic system complexity as their dominant hurdle, up from 39%. Inter-agent communication protocols, state management across agent boundaries, conflict resolution mechanisms—these become core challenges that didn't exist in single-agent systems.

Execution is opaque. When a research task runs, you often don't know what actually happened. Which sources were consulted? What was the reasoning chain? Where did the logic branch? Without observability, debugging becomes guesswork and trust erodes with each unexplained failure.
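Even minimal tracing answers the questions above: which step ran, which sources it touched, how long it took, and why it failed. The sketch below uses only the standard library; the `traced` decorator, the `StepRecord` fields, and the convention that a step returns its sources under a `"sources"` key are all assumptions made for this example. A production system would more likely export this data through an established tracing framework such as OpenTelemetry.

```python
# Minimal tracing sketch using only the standard library.
# `traced`, `StepRecord`, and the "sources" key convention are hypothetical.
import functools
import time
from dataclasses import dataclass, field
from typing import Any, Callable, List, Optional


@dataclass
class StepRecord:
    name: str
    duration_s: float
    ok: bool
    error: Optional[str] = None
    sources: List[str] = field(default_factory=list)


TRACE: List[StepRecord] = []  # in-memory trace; a real system would export it


def traced(step_name: str) -> Callable:
    """Decorator that records timing, outcome, and cited sources for a step."""
    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            try:
                result = fn(*args, **kwargs)
            except Exception as exc:
                # Record the failure, then re-raise so callers still see it.
                TRACE.append(StepRecord(step_name, time.perf_counter() - start,
                                        ok=False, error=repr(exc)))
                raise
            sources = result.get("sources", []) if isinstance(result, dict) else []
            TRACE.append(StepRecord(step_name, time.perf_counter() - start,
                                    ok=True, sources=list(sources)))
            return result
        return wrapper
    return decorator


@traced("retrieve")
def retrieve(query: str) -> dict:
    # Hypothetical stand-in for a real retrieval call.
    return {"sources": ["filing.pdf#p12", "paper_a.pdf#p3"], "passages": [query]}


@traced("validate")
def validate(retrieved: dict) -> dict:
    # Hypothetical failure, e.g. a primary source that could not be reached.
    raise TimeoutError("primary source unavailable")


if __name__ == "__main__":
    try:
        validate(retrieve("termination clauses"))
    except TimeoutError:
        pass
    for record in TRACE:
        print(record)
```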

These are not intelligence failures. The model "knows" the answer in some abstract sense. These are engineering and orchestration failures—the mundane, unsexy problems of systems integration that persist long after the model itself achieves impressive benchmark scores.

At scale, intelligence isn't scarce. Coordination is.

The Hidden Constraint: Execution Under Compute Limits

There's a common assumption that compute problems solve themselves. Models get cheaper, hardware improves, and what was expensive yesterday becomes trivial tomorrow.

This is partially true. LLM inference costs have dropped by a factor of roughly 1,000 in three years, from $60 per million tokens when GPT-3 became publicly available to around $0.06 per million tokens for equivalent capability today. The curve is steeper than Moore's Law and has so far shown no clear sign of flattening.

But cheaper doesn't mean free. And for serious research work, the constraint isn't the cost of a single query—it's the cost of the thousands of queries required to complete a complex task reliably.

Consider a research workflow that requires ingesting 50 documents, cross-referencing entities across them, validating claims against primary sources, and synthesising findings into a coherent analysis. Even at current prices, the compute costs accumulate. More importantly, the latency compounds. Each step waits on the previous one. Each retry burns additional credits. Each failure cascades.
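A back-of-the-envelope model makes the compounding concrete. The sketch below assumes each model call succeeds independently with a fixed probability, is retried up to a fixed budget, and runs one after another; the `Step` fields are hypothetical, and every number in the example workflow is a made-up placeholder, not a measured price or latency.

```python
# Back-of-the-envelope cost/latency model for a multi-step research workflow.
# ASSUMPTIONS: independent attempts, fixed retry budget, sequential calls.
# All numbers in the example workflow are hypothetical placeholders.
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Step:
    name: str
    calls: int               # model calls this step needs
    cost_per_call: float     # USD per attempt
    latency_per_call: float  # seconds per attempt
    success_prob: float      # probability a single attempt succeeds
    max_attempts: int = 3    # retry budget per call


def expected_attempts(p: float, max_attempts: int) -> float:
    """Expected attempts per call: attempt k+1 only happens if the first k failed."""
    return sum((1 - p) ** k for k in range(max_attempts))


def call_success(p: float, max_attempts: int) -> float:
    """Probability a call succeeds within its retry budget."""
    return 1 - (1 - p) ** max_attempts


def estimate(steps: List[Step]) -> Tuple[float, float, float]:
    """Return (expected cost, expected latency, probability the whole run succeeds).

    Ignores early termination when a call exhausts its budget, so cost and
    latency are slight overestimates.
    """
    cost = latency = 0.0
    success = 1.0
    for s in steps:
        attempts = s.calls * expected_attempts(s.success_prob, s.max_attempts)
        cost += attempts * s.cost_per_call
        latency += attempts * s.latency_per_call  # sequential: latencies add up
        success *= call_success(s.success_prob, s.max_attempts) ** s.calls
    return cost, latency, success


if __name__ == "__main__":
    workflow = [
        Step("ingest", calls=50, cost_per_call=0.02, latency_per_call=4.0, success_prob=0.95),
        Step("cross_reference", calls=20, cost_per_call=0.05, latency_per_call=8.0, success_prob=0.90),
        Step("validate", calls=30, cost_per_call=0.03, latency_per_call=6.0, success_prob=0.90),
        Step("synthesise", calls=1, cost_per_call=0.20, latency_per_call=30.0, success_prob=0.95),
    ]
    cost, latency, success = estimate(workflow)
    print(f"expected cost ${cost:.2f}, latency {latency / 60:.1f} min, "
          f"end-to-end success {success:.1%}")
```

Parallelising calls within a step cuts latency but not cost, and a larger retry budget buys reliability at the price of more spend. That trade-off, not the price of a single query, is what dominates serious workflows.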

Some Tier-1 financial institutions are reportedly spending up to $20 million a day on generative AI. At that scale, a 10% efficiency improvement, roughly $2 million a day, isn't an optimisation. It's a strategic necessity.

The mindset shift required is subtle but important. "Just add more compute" does not scale as a strategy. Serious users care about optimality, not just correctness. They need answers that are not only accurate but efficient—achieved with minimal waste, minimal latency, and minimal cost.
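One common way to pursue optimality rather than mere correctness is a cost-ordered cascade: try the cheapest option first and escalate only when a quality check fails. The sketch below is a generic illustration of that technique, not a description of any particular product; the tiers, their costs, and the `passes_check` function are hypothetical stand-ins for real models and real validation.

```python
# Cost-ordered cascade sketch. Tiers, costs, and the check are hypothetical.
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class Tier:
    name: str
    cost: float                # cost per call, in arbitrary units
    run: Callable[[str], str]  # stand-in for a model call


def cascade(
    task: str,
    tiers: List[Tier],
    passes_check: Callable[[str, str], bool],
) -> Tuple[Optional[str], float, Optional[str]]:
    """Try tiers from cheapest to most expensive; stop at the first answer that passes.

    Returns (answer, total cost spent, name of the tier that answered).
    """
    spent = 0.0
    for tier in sorted(tiers, key=lambda t: t.cost):
        answer = tier.run(task)
        spent += tier.cost  # rejected answers still cost money
        if passes_check(task, answer):
            return answer, spent, tier.name
    return None, spent, None


if __name__ == "__main__":
    small = Tier("small", 1.0, lambda t: "ok" if len(t) < 20 else "unsure")
    large = Tier("large", 10.0, lambda t: "ok")
    check = lambda task, answer: answer == "ok"
    for task in ("short lookup", "multi-source synthesis of filings"):
        print(task, "->", cascade(task, [large, small], check))
```

The design only pays off if the check is cheap and trustworthy; a cascade with a weak check simply ships cheap wrong answers.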

When every task is expensive, how you execute matters more than what you know.

A Better Way to Frame the Problem

The industry has spent the past several years optimising for a particular framing: AI as assistant, answering questions, responding to prompts, generating text on demand. This framing was useful for adoption. It's becoming limiting for productivity.

The reframe required is from assistants to systems. An assistant responds. A system executes. An assistant answers questions about your documents. A system processes those documents, extracts structured data, validates it against external sources, and produces actionable output—without requiring you to babysit each step.

This means moving from answers to execution. The value isn't in the model's response to a single prompt. It's in the model's ability to complete a multi-step workflow reliably, handling edge cases, recovering from failures, and producing consistent results across diverse inputs.
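Two basic building blocks behind recovering from failures are retrying transient errors with exponential backoff and checkpointing completed steps so a rerun resumes instead of starting over. The sketch below shows both in plain Python; `with_retries`, `run_pipeline`, and the in-memory `checkpoint` dict are illustrative names, and a real system would persist checkpoints and retry only errors known to be transient.

```python
# Sketch: retries with exponential backoff plus step-level checkpointing.
# Function names and the in-memory checkpoint are hypothetical/illustrative.
import functools
import time
from typing import Any, Callable, Dict, List, Tuple, Type


def with_retries(
    fn: Callable[[], Any],
    *,
    max_attempts: int = 3,
    base_delay: float = 0.5,
    retry_on: Tuple[Type[BaseException], ...] = (Exception,),
    sleep: Callable[[float], None] = time.sleep,
) -> Any:
    """Call fn(), retrying on `retry_on` errors with delays of base_delay * 2**k."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except retry_on:
            if attempt == max_attempts:
                raise  # budget exhausted: surface the last error
            sleep(base_delay * 2 ** (attempt - 1))


def run_pipeline(
    steps: List[Tuple[str, Callable[[Any], Any]]],
    checkpoint: Dict[str, Any],
    **retry_kwargs: Any,
) -> Any:
    """Run named steps in order, feeding each step the previous step's output.

    Finished steps are stored in `checkpoint`, so rerunning after a failure
    skips them and resumes from the first unfinished step.
    """
    value: Any = None
    for name, step in steps:
        if name in checkpoint:
            value = checkpoint[name]
            continue
        value = with_retries(functools.partial(step, value), **retry_kwargs)
        checkpoint[name] = value
    return value


if __name__ == "__main__":
    failures = {"extract": 1}  # make "extract" fail once, transiently

    def ingest(_: Any) -> List[str]:
        return ["doc_a", "doc_b"]

    def extract(docs: List[str]) -> Dict[str, str]:
        if failures["extract"] > 0:
            failures["extract"] -= 1
            raise ConnectionError("transient extraction failure")
        return {doc: f"fields from {doc}" for doc in docs}

    state: Dict[str, Any] = {}
    print(run_pipeline([("ingest", ingest), ("extract", extract)], state, base_delay=0.1))
```

Checkpointing is what keeps a failure late in a long workflow from forcing every earlier, already-paid-for step to run again.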

Most importantly, it requires moving from smarter models to better orchestration. A Nature study analysing 41.3 million research papers found that scientists using AI publish 3.02 times more papers and receive 4.84 times more citations. But the collective effect was a narrowing of scientific focus by 4.63% and a 22% decrease in engagement between scientists. Individual capability expanded. Systemic productivity contracted.

This is one dimension of the paradox that better orchestration could address. The challenge isn't making individual researchers more prolific; current tools already do that. The challenge is ensuring that greater output translates into genuine progress rather than fragmented activity.

The next meaningful gains in research productivity won't come from incrementally smarter models. They'll come from systems that handle complexity, context, and computation coherently—orchestrating intelligence rather than simply deploying it.

What Matters Next

Intelligence will keep improving. The benchmark scores will continue their upward march. The press releases will announce new capabilities with appropriate fanfare. None of that is in question.

But the gap between capability and productivity will persist unless execution improves. The bottleneck has moved from "can the model do this?" to "can the system complete this reliably, efficiently, and at scale?"

The teams that recognise this shift will build differently. They'll invest less in prompt engineering and more in workflow orchestration. They'll care less about which model tops the leaderboard and more about how tasks flow through their systems. They'll measure success not by the impressiveness of individual outputs but by the consistency of end-to-end results.

The winners won't look flashier. They'll feel calmer, faster, more reliable.

Some teams are already building for this shift.

Build research systems, not prompts

If your work involves large documents, complex workflows, or compute-heavy analysis, Ardor is built for that reality.

Ardor helps you orchestrate research execution end-to-end—from ingestion to output—without babysitting every step.