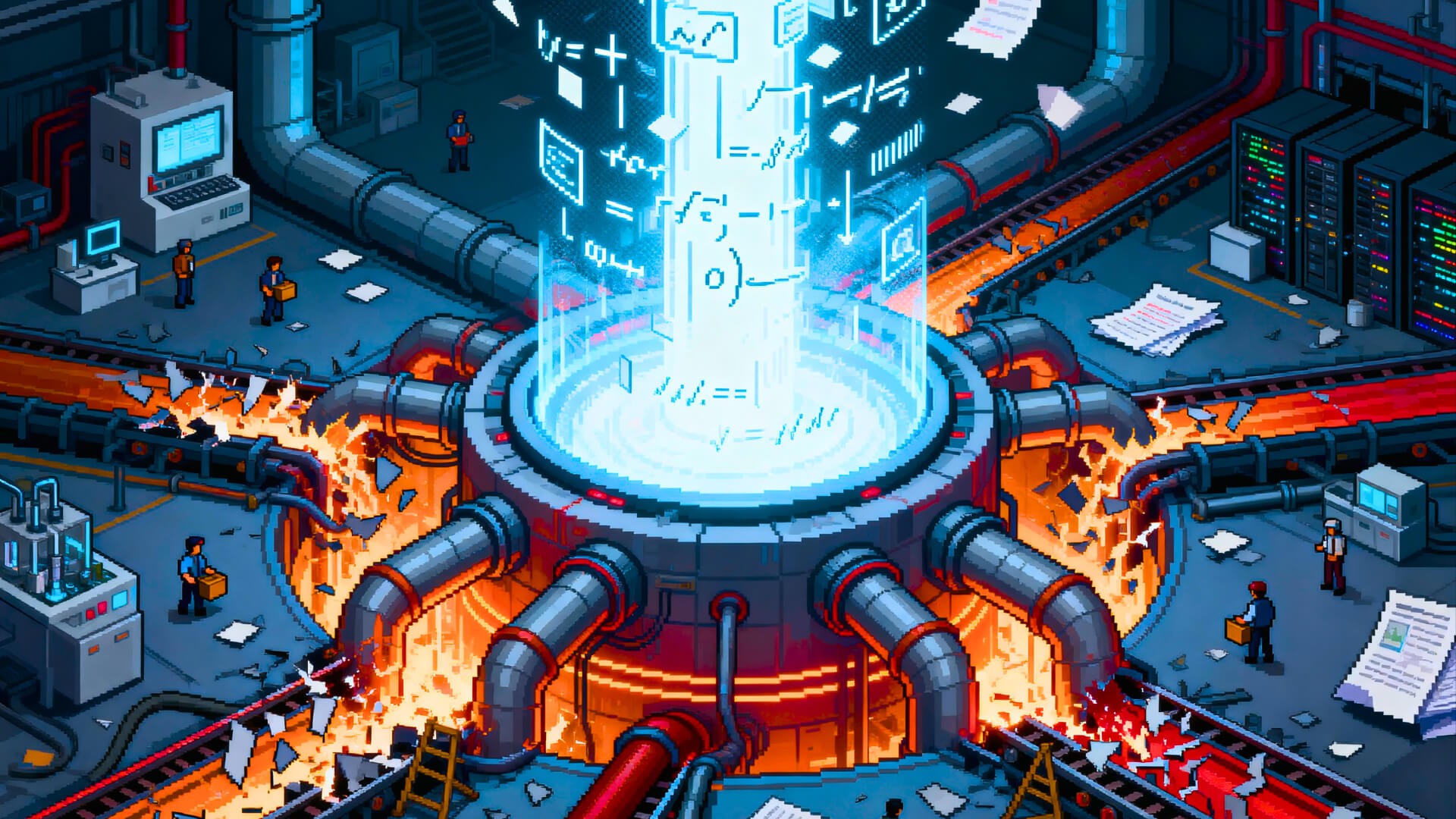

Building Production-Grade AI Agents: Why 95% Never Make It Past the Demo

Everyone’s building agents. Almost no one’s shipping them.

Out of 1,837 engineering leaders surveyed by Cleanlab, only 95 reported having AI agents live in production. That’s roughly 5%. And even among that tiny minority, most are “still early in capability, control, and transparency.”

The industry has an agent problem. It’s not a model problem or a prompting problem. It’s an infrastructure problem—and until teams treat agents as software systems rather than clever prompts, the gap between demo and production will keep claiming projects.

The Gap Nobody Talks About

The numbers paint a brutal picture:

62% of organisations are experimenting with AI agents, but fewer than 10% have scaled them in any single business function (McKinsey, 2025)

Only 16% of enterprise deployments qualify as “true agents”—systems where an LLM plans, executes, observes feedback, and adapts. The rest are fixed-sequence workflows wrapped around a single model call (Menlo Ventures, 2025)

70% of regulated enterprises rebuild their AI agent stack every three months or faster (Cleanlab, 2025)

Strip away the marketing and most “AI agents” are basic if-then logic around a model call. That works for demos. It doesn’t work when your agent needs to handle edge cases at 3am without human intervention.

Why Agents Fail: It’s Not the Model

The instinct is to blame the LLM. Better model, better agent, right?

Wrong. The model is rarely the bottleneck. The failures happen in everything around the model:

1. No failure handling

Agents fail. Models hallucinate. APIs time out. Tool calls return unexpected data. Production agents need graceful degradation, retry logic, and fallback paths. Demo agents assume everything works.

A study of agent failure patterns found that hallucinated facts don’t stay contained—they cascade. One phantom SKU triggers pricing errors, inventory checks, shipping labels, and customer confirmations. By the time monitoring catches it, four systems are poisoned.
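To make "retry logic and fallback paths" concrete, here is a minimal sketch in Python. The helper name `call_with_retry` and its parameters are illustrative assumptions, not part of any particular framework, and a real system would likely retry only on specific error types.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def call_with_retry(
    fn: Callable[[], T],
    *,
    attempts: int = 3,
    base_delay: float = 0.5,
    fallback: Optional[Callable[[], T]] = None,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on any exception with exponential backoff.

    If every attempt fails, return fallback() when one is given,
    otherwise re-raise the last error.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error: Optional[Exception] = None
    for attempt in range(attempts):
        try:
            return fn()
        except Exception as exc:  # hypothetical: catch narrower types in practice
            last_error = exc
            if attempt < attempts - 1:
                # Wait base_delay, then 2x, then 4x, ... between attempts.
                sleep(base_delay * (2 ** attempt))
    if fallback is not None:
        return fallback()  # degrade gracefully instead of failing outright
    raise last_error
```

A call such as `call_with_retry(fetch_price, fallback=lambda: cached_price)` (both names hypothetical) would try the live tool three times and then fall back to a cached value rather than passing an error downstream.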

2. No state management

Agents that work in stateless demos collapse when they need to maintain context across sessions, remember what they’ve done, or coordinate with other agents. State isn’t a nice-to-have. It’s the difference between “assistant” and “autonomous system.”

3. No observability

You can’t fix what you can’t see. Yet fewer than one in three production teams are satisfied with their observability and guardrail solutions. 62% plan to improve observability in the next year, making it the single most urgent investment area.

When your agent makes a decision at 2am, can you trace exactly why? Can you replay the context it had? Can you identify which step in a multi-step workflow went wrong?

4. No orchestration

Real agents don’t operate in isolation. They call tools, query databases, trigger workflows, coordinate with other agents. Orchestrating these interactions—handling parallelism, managing dependencies, recovering from partial failures—is where most agent architectures fall apart.
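As a rough illustration of handling parallelism and partial failures, the sketch below runs independent tool calls concurrently with Python's `asyncio` and separates the successes from the failures instead of letting one error sink the whole batch. The function name `run_tools` and the shape of the `tools` mapping are assumptions made for this example.

```python
import asyncio
from typing import Any, Awaitable, Callable, Dict, Tuple


async def run_tools(
    tools: Dict[str, Callable[[], Awaitable[Any]]],
    timeout: float = 5.0,
) -> Tuple[Dict[str, Any], Dict[str, BaseException]]:
    """Run independent tool calls concurrently; return (results, failures)."""
    names = list(tools)
    # return_exceptions=True makes gather hand back errors as values,
    # so one failing tool does not cancel or hide the others.
    outcomes = await asyncio.gather(
        *(asyncio.wait_for(tools[name](), timeout) for name in names),
        return_exceptions=True,
    )
    results: Dict[str, Any] = {}
    failures: Dict[str, BaseException] = {}
    for name, outcome in zip(names, outcomes):
        if isinstance(outcome, BaseException):
            failures[name] = outcome  # includes per-tool timeouts
        else:
            results[name] = outcome
    return results, failures
```

The caller can then decide, per tool, whether a failure is fatal, retryable, or safe to ignore. That decision is the orchestration logic a demo usually skips.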

What Production Actually Requires

The teams successfully running agents in production have figured out something the demo-builders haven’t: agents are software, and software requires infrastructure.

Here’s what that looks like in practice:

Orchestration as a first-class concern

Production agents need explicit workflow management: what happens when step 3 fails? How do you retry step 2 without re-running step 1? What’s the timeout policy? Where are the human-in-the-loop checkpoints?
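One way to answer "how do you retry step 2 without re-running step 1?" is to checkpoint each step's result. The sketch below is a deliberately small version of that idea, assuming step results are JSON-serialisable. `run_workflow` and the checkpoint file layout are hypothetical, not any orchestration framework's API.

```python
import json
from pathlib import Path
from typing import Any, Callable, Dict, List, Tuple

# A step is a name plus a function that receives all earlier results.
Step = Tuple[str, Callable[[Dict[str, Any]], Any]]


def run_workflow(steps: List[Step], checkpoint: Path) -> Dict[str, Any]:
    """Run steps in order, saving each result to disk.

    If a step raises, the error propagates. Calling run_workflow again
    with the same checkpoint file resumes after the last successful step.
    """
    done: Dict[str, Any] = (
        json.loads(checkpoint.read_text()) if checkpoint.exists() else {}
    )
    for name, fn in steps:
        if name in done:
            continue  # completed in an earlier run, so do not repeat it
        done[name] = fn(done)
        checkpoint.write_text(json.dumps(done))  # persist before moving on
    return done
```

Timeouts and human-in-the-loop checkpoints would fit the same shape: a step that raises (or pauses) leaves the checkpoint intact, so the run can resume from that point rather than from scratch.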

This isn’t prompt engineering. It’s systems engineering.

State that persists and recovers

Agents need memory that survives across sessions, context that can be inspected and modified, and state that can be rolled back when things go wrong. The Google Cloud CTO office describes this as the critical difference: “An LLM is a brain in a jar that knows facts. An agent is that same brain with hands and a plan. It uses logic to break down goals, tools to interact with the world, and memory so it doesn’t repeat mistakes.”
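As a sketch of state that persists, can be inspected, and can be rolled back, the class below keeps agent state in a JSON file alongside a list of snapshots. `AgentState` and its file format are assumptions for illustration. A production system would more likely sit on a database with proper transactions.

```python
import copy
import json
from pathlib import Path
from typing import Any, Dict, List


class AgentState:
    """Key-value agent state with on-disk persistence and snapshot rollback."""

    def __init__(self, path: Path) -> None:
        self.path = path
        self.data: Dict[str, Any] = {}
        self._snapshots: List[Dict[str, Any]] = []
        if path.exists():  # reload whatever an earlier session saved
            saved = json.loads(path.read_text())
            self.data = saved["data"]
            self._snapshots = saved["snapshots"]

    def set(self, key: str, value: Any) -> None:
        self.data[key] = value
        self._save()

    def snapshot(self) -> int:
        """Record the current state and return its snapshot id."""
        self._snapshots.append(copy.deepcopy(self.data))
        self._save()
        return len(self._snapshots) - 1

    def rollback(self, snapshot_id: int) -> None:
        """Restore the given snapshot and discard any later ones."""
        self.data = copy.deepcopy(self._snapshots[snapshot_id])
        del self._snapshots[snapshot_id + 1:]
        self._save()

    def _save(self) -> None:
        payload = {"data": self.data, "snapshots": self._snapshots}
        self.path.write_text(json.dumps(payload))
```

Taking a snapshot before each risky action gives the agent, or an operator, a known-good point to return to, and the plain JSON file means the state can be opened and edited by hand when debugging.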

Observability built in, not bolted on

The best teams treat agent observability like application observability: traces for every decision, logs for every tool call, metrics for latency and error rates. When something goes wrong, you need a full stack trace. Not just “the model said something weird.”
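A minimal sketch of what "traces for every decision, logs for every tool call" can look like: a tracer that records one span per step, with its duration, status, and any error. The `Tracer` class here is a hypothetical stand-in. Teams would typically reach for an established tracing standard such as OpenTelemetry rather than rolling their own.

```python
import time
import uuid
from contextlib import contextmanager
from typing import Any, Dict, Iterator, List


class Tracer:
    """Collects one record ("span") per agent step under a shared trace id."""

    def __init__(self) -> None:
        self.trace_id = uuid.uuid4().hex
        self.spans: List[Dict[str, Any]] = []

    @contextmanager
    def span(self, name: str, **attrs: Any) -> Iterator[Dict[str, Any]]:
        record: Dict[str, Any] = {
            "trace_id": self.trace_id,
            "name": name,
            "attrs": attrs,  # inputs, and any outputs the caller adds
            "status": "ok",
            "error": None,
        }
        start = time.perf_counter()
        try:
            yield record
        except Exception as exc:
            record["status"] = "error"
            record["error"] = repr(exc)
            raise  # record the failure, but never swallow it
        finally:
            record["duration_ms"] = (time.perf_counter() - start) * 1000
            self.spans.append(record)
```

Wrapping each model call and tool call in `with tracer.span("tool:lookup_sku", sku=sku):` (names hypothetical) produces an ordered list of steps that shows which one failed, how long each took, and what inputs it saw.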

Failure modes as design constraints

Production agents are designed around failure. What happens when the LLM hallucinates? What happens when a tool returns malformed data? What happens when context exceeds the window? These aren’t edge cases to handle later. They’re architectural requirements.
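Two of these questions can be sketched directly in code. The first function below rejects malformed or hallucinated output before anything downstream acts on it, and the second keeps conversation history within a fixed budget. The order-line schema, the `known_skus` catalogue, and the use of character counts as a stand-in for tokens are all assumptions made for illustration.

```python
import json
from typing import Any, Dict, List, Set


class ToolOutputError(ValueError):
    """Raised when a tool or model output fails validation."""


def parse_order_line(raw: str, known_skus: Set[str]) -> Dict[str, Any]:
    """Parse and validate a JSON order line; reject unknown SKUs."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ToolOutputError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ToolOutputError("expected a JSON object")
    sku, qty = data.get("sku"), data.get("quantity")
    if not isinstance(sku, str) or sku not in known_skus:
        raise ToolOutputError(f"unknown SKU: {sku!r}")  # stops a phantom SKU here
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(qty, bool) or not isinstance(qty, int) or qty < 1:
        raise ToolOutputError(f"invalid quantity: {qty!r}")
    return {"sku": sku, "quantity": qty}


def trim_history(messages: List[str], max_chars: int) -> List[str]:
    """Keep the most recent messages whose total length fits the budget."""
    kept: List[str] = []
    used = 0
    for msg in reversed(messages):  # walk from newest to oldest
        if used + len(msg) > max_chars:
            break
        kept.append(msg)
        used += len(msg)
    return list(reversed(kept))  # restore chronological order
```

Neither function is sophisticated. What matters is where they sit: at the boundary between the model and the systems it touches, so a bad value is stopped at the first hop instead of the fourth.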

The Infrastructure Gap

Here’s the uncomfortable truth: most teams don’t have the infrastructure to run agents in production.

They have:

A model API

A prompting framework

Maybe some RAG

Hope

They don’t have:

Workflow orchestration with failure recovery

Persistent, inspectable state management

Production-grade observability

Systematic evaluation and testing

Building this infrastructure from scratch takes months. Maintaining it as models and frameworks evolve takes a dedicated team. 70% of regulated enterprises are rebuilding their stack every quarter just to keep up.

This is why the “experiment everywhere” phase is giving way to platform consolidation. Teams are realising that the path to production agents isn’t “better prompts”. It’s better infrastructure.

The Path Forward

If you’re stuck in the demo-to-production gap, here’s what actually moves the needle:

Start with the failure modes, not the happy path. Design your agent around what happens when things go wrong. The happy path is easy. The error handling is where production lives.

Treat state as infrastructure. Don’t bolt memory onto your agent as an afterthought. Design your state model first: what needs to persist, what needs to be inspectable, what needs to be recoverable.

Invest in observability early. You will not be able to debug production agents without traces. Build this in from day one, not after the first incident.

Choose your orchestration layer deliberately. This isn’t about which framework is trendiest. It’s about which one gives you the control you need when things break.

Accept that the stack will change. Build for modularity. The model you’re using today won’t be the model you’re using in six months. The framework you chose might not exist in a year. Design for replaceability.
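Designing for replaceability can be as simple as having the agent depend on a small interface rather than on a specific vendor's client. The sketch below uses Python's `typing.Protocol`. `ModelClient`, `EchoModel`, and `UpperModel` are hypothetical stand-ins, not real provider SDKs.

```python
from typing import Protocol


class ModelClient(Protocol):
    """The only thing the agent is allowed to know about a model."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in for today's model provider."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UpperModel:
    """Stand-in for whichever provider replaces it."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


class Agent:
    def __init__(self, model: ModelClient) -> None:
        self.model = model  # injected, so swapping models is a one-line change

    def answer(self, question: str) -> str:
        return self.model.complete(question).strip()
```

Under this arrangement, `Agent(EchoModel())` and `Agent(UpperModel())` run the same agent code, and a thin adapter around a real SDK would slot in the same way.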

The Bottom Line

The gap between agent demos and agent software is where most projects die. Closing that gap isn’t about better prompts or smarter models. It’s about treating agents as production systems that need real infrastructure: orchestration, state, observability, and failure handling.

The teams shipping agents to production aren’t the ones with the cleverest prompts. They’re the ones who understood, early, that agents are software, and built accordingly.

That’s the work we’re doing.

Ready to move your agents from demo to production?

Sign up to see how Ardor handles orchestration, state, and observability for the full agentic SDLC.